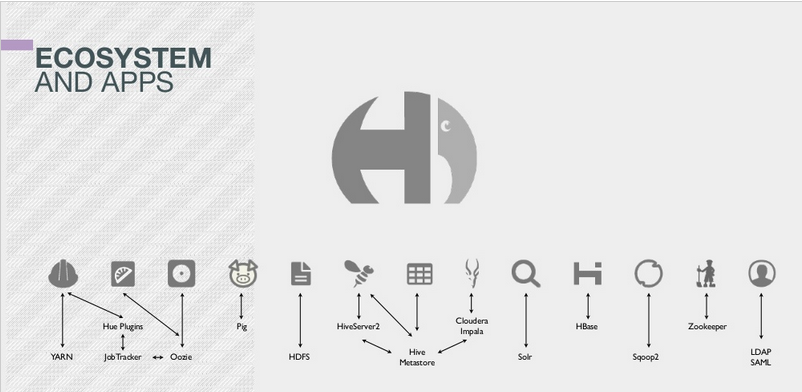

Hue is a lightweight Web server that lets you use Hadoop directly from your browser. Hue is just a ‘view on top of any Hadoop distribution’ and can be installed on any machine.

There are multiple ways (cf. the ‘Download’ section of gethue.com) to install Hue. The next step is then to configure Hue to point to your Hadoop cluster. By default, Hue assumes a local cluster (i.e. there is only one machine). In order to interact with a real cluster, Hue needs to know on which hosts the Hadoop services are running.

Where is my hue.ini?

Hue's main configuration happens in a hue.ini file. It lists a lot of options, but essentially they are the addresses and ports of HDFS, YARN, Oozie, Hive… Depending on the distribution you installed, the ini file is located in:

- CDH package: /etc/hue/conf/hue.ini

- A tarball release: /usr/share/desktop/conf/hue.ini

- Development version: desktop/conf/pseudo-distributed.ini

- Cloudera Manager: CM generates the hue.ini for you, so no hassle 😉 The file is at ``/var/run/cloudera-scm-agent/process/`ls -alrt /var/run/cloudera-scm-agent/process | grep HUE | tail -1 | awk '{print $9}'`/hue.ini``
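The shell one-liner above picks the most recently modified HUE process directory. Below is the same lookup in Python, offered as a sketch. It assumes the Cloudera Manager agent layout shown in that path and matches on directory names containing "HUE" rather than on the full `ls -al` output line. `latest_cm_hue_ini` is a hypothetical helper name.

```python
import os
from typing import Optional

# Agent process directory, taken from the shell command above.
CM_PROCESS_DIR = "/var/run/cloudera-scm-agent/process"


def latest_cm_hue_ini(process_dir: str = CM_PROCESS_DIR) -> Optional[str]:
    """Return hue.ini inside the newest directory whose name contains "HUE".

    Mirrors `ls -alrt | grep HUE | tail -1`: keep HUE entries, sort them by
    modification time and take the most recent one. Returns None if none match.
    """
    candidates = [
        os.path.join(process_dir, name)
        for name in os.listdir(process_dir)
        if "HUE" in name and os.path.isdir(os.path.join(process_dir, name))
    ]
    if not candidates:
        return None
    newest = max(candidates, key=os.path.getmtime)
    return os.path.join(newest, "hue.ini")
```

On a CM-managed host, `latest_cm_hue_ini()` should return the same path as the shell version, as long as the process directory is readable by the user running it.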

**Note:** To override a value in Cloudera Manager, you need to enter each of the mini sections below verbatim into the Hue Safety Valve: Hue Service → Configuration → Service-Wide → Advanced → Hue Service Advanced Configuration Snippet (Safety Valve) for hue_safety_valve.ini

At any time, you can see the path to the hue.ini and its current values on the /desktop/dump_config page. Then, for each Hadoop service, Hue contains a section that needs to be updated with the correct hostnames and ports. Here is an example of the Hive section in the ini file:

```ini
[beeswax]
  # Host where HiveServer2 is running.
  hive_server_host=localhost
```

To point to another server, just replace the host value with ‘hiveserver.ent.com’:

```ini
[beeswax]
  # Host where HiveServer2 is running.
  hive_server_host=hiveserver.ent.com
```

**Note:** Any line starting with a # is treated as a comment and is ignored.

**Note:** Misconfigured services are listed on the /about/admin_wizard page.

**Note:** After each change to the ini file, Hue must be restarted to pick it up.

**Note:** In some cases, as explained in the How to configure Hadoop for Hue documentation, the APIs of these services need to be turned on and Hue set as a proxy user.
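Besides the /about/admin_wizard page, you can spot settings that still point at the local default by flattening hue.ini into dotted keys. The following is a minimal sketch built on hypothetical helpers. It follows the bracket nesting ([hadoop] > [[hdfs_clusters]] > [[[default]]]) and skips # comment lines. It is not Hue's own parser: multi-line values, for instance, are not handled.

```python
import re
from typing import Dict, List

# [name], [[name]] or [[[name]]]: the number of brackets gives the nesting depth.
SECTION_RE = re.compile(r"^(\[+)\s*([^\[\]]+?)\s*(\]+)$")


def read_hue_ini(text: str) -> Dict[str, str]:
    """Flatten hue.ini-style text into {"section.sub.key": value}."""
    path: List[str] = []  # current section name at each nesting level
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or comment
        match = SECTION_RE.match(line)
        if match:
            depth = len(match.group(1))
            path = path[: depth - 1] + [match.group(2)]
            continue
        if "=" in line:
            key, value = line.split("=", 1)
            values[".".join(path + [key.strip()])] = value.strip()
    return values


def still_local(values: Dict[str, str]) -> Dict[str, str]:
    """Keep only the settings whose value still mentions localhost."""
    return {key: value for key, value in values.items() if "localhost" in value}
```

For example, `still_local(read_hue_ini(open("/etc/hue/conf/hue.ini").read()))` lists the services that still need a real hostname.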

Removing Apps

A separate article shows how to configure Hue to hide certain apps. The list of all the apps is available on the /desktop/dump_config page of Hue.

Here are the main sections that you will need to update in order to have each service accessible in Hue:

HDFS

This is required for listing or creating files. Replace localhost with the real address of the NameNode, whose WebHDFS endpoint usually listens on port 50070 (e.g. http://localhost:50070).

Enter this in hdfs-site.xml to enable WebHDFS in the NameNode and DataNodes:

```xml
<property>
  <name>dfs.webhdfs.enabled</name>
  <value>true</value>
</property>
```
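It can help to confirm that the property actually made it into the file. The sketch below uses only Python's standard library to read a Hadoop-style XML configuration and report whether dfs.webhdfs.enabled is explicitly set to true. The function names are hypothetical. A missing property is reported as "not explicitly enabled" and is not resolved against Hadoop's defaults.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def hadoop_property(xml_text: str, name: str) -> Optional[str]:
    """Return the <value> of the <property> whose <name> matches, or None."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name", default="").strip() == name:
            return prop.findtext("value", default="").strip()
    return None


def webhdfs_explicitly_enabled(hdfs_site_xml: str) -> bool:
    """True only if dfs.webhdfs.enabled is present and set to true."""
    value = hadoop_property(hdfs_site_xml, "dfs.webhdfs.enabled")
    return value is not None and value.lower() == "true"
```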

Configure Hue as a proxy user for all other users and groups, meaning it may submit a request on behalf of any other user. Add to core-site.xml:

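```xml
<property>
  <name>hadoop.proxyuser.hue.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hue.groups</name>
  <value>*</value>
</property>
```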
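If you manage these files with scripts, you can generate the two proxy-user properties instead of typing them. The following is a minimal sketch using ElementTree. `hadoop_properties_xml` is a hypothetical helper. Its output is a standalone <configuration> element, so you still need to merge its <property> children into your existing core-site.xml.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def hadoop_properties_xml(props: Dict[str, str]) -> str:
    """Serialize name/value pairs as Hadoop <property> elements in <configuration>."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


# Hue may impersonate users when connecting from any host, for users in any group.
PROXYUSER_HUE = {
    "hadoop.proxyuser.hue.hosts": "*",
    "hadoop.proxyuser.hue.groups": "*",
}
```

Replacing `*` with a comma-separated list of hosts or groups narrows down whom Hue may act on behalf of.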

Then, if the NameNode is on a different host than Hue, don't forget to update hue.ini:

```ini
[hadoop]
  [[hdfs_clusters]]
    [[[default]]]
      # Enter the filesystem uri
      fs_defaultfs=hdfs://localhost:8020

      # Use WebHdfs/HttpFs as the communication mechanism.
      # Domain should be the NameNode or HttpFs host.
      webhdfs_url=http://localhost:50070/webhdfs/v1
```
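Both values share the NameNode hostname, so you can derive them from it. The sketch below assumes the ports from the example above (8020 for the filesystem URI and 50070 for WebHDFS), which may differ on your cluster. The helper names are hypothetical. The second function builds a WebHDFS LISTSTATUS URL. You can open it with curl or a browser to check that the endpoint answers before pointing Hue at it.

```python
from urllib.parse import urlencode


def hdfs_settings(namenode_host: str, rpc_port: int = 8020, http_port: int = 50070) -> dict:
    """Build the fs_defaultfs and webhdfs_url values for [[[default]]]."""
    return {
        "fs_defaultfs": f"hdfs://{namenode_host}:{rpc_port}",
        "webhdfs_url": f"http://{namenode_host}:{http_port}/webhdfs/v1",
    }


def webhdfs_list_url(webhdfs_url: str, path: str = "/", user: str = "hue") -> str:
    """URL of a WebHDFS LISTSTATUS call on `path` (which must start with '/')."""
    query = urlencode({"op": "LISTSTATUS", "user.name": user})
    return f"{webhdfs_url.rstrip('/')}{path}?{query}"
```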

YARN

The ResourceManager is usually on http://localhost:8088 by default. The ProxyServer and Job History Server also need to be specified. Job Browser will then let you list and kill running applications and get their logs.

```ini
[hadoop]
  [[yarn_clusters]]
    [[[default]]]
      # Enter the host on which you are running the ResourceManager
      resourcemanager_host=localhost

      # Whether to submit jobs to this cluster
      submit_to=True

      # URL of the ResourceManager API
      resourcemanager_api_url=http://localhost:8088

      # URL of the ProxyServer API
      proxy_api_url=http://localhost:8088

      # URL of the HistoryServer API
      history_server_api_url=http://localhost:19888
```
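To check that these URLs are right, you can query the ResourceManager and Job History Server REST APIs directly. This sketch assumes the standard Hadoop endpoints /ws/v1/cluster/info and /ws/v1/history/info. The helper names are hypothetical, and `fetch_json` needs network access to the cluster.

```python
import json
from urllib.request import urlopen


def yarn_check_urls(resourcemanager_api_url: str, history_server_api_url: str) -> dict:
    """REST endpoints that should answer if the [[yarn_clusters]] URLs are correct."""
    return {
        "resourcemanager": resourcemanager_api_url.rstrip("/") + "/ws/v1/cluster/info",
        "history_server": history_server_api_url.rstrip("/") + "/ws/v1/history/info",
    }


def fetch_json(url: str, timeout: float = 5.0) -> dict:
    """GET `url` and decode the JSON body (requires a reachable cluster)."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)
```

For example, `fetch_json(yarn_check_urls("http://localhost:8088", "http://localhost:19888")["resourcemanager"])["clusterInfo"]["state"]` should report `STARTED` on a running ResourceManager.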

Hive

Here we need a running HiveServer2 in order to send SQL queries.

```ini
[beeswax]
  # Host where HiveServer2 is running.
  hive_server_host=localhost
```
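A plain TCP connection test is a quick way to confirm that Hue's machine can reach HiveServer2. The following is a minimal sketch; `port_is_open` is a hypothetical helper. The usage line assumes port 10000, HiveServer2's usual default. An open port only shows that something is listening, not that it is HiveServer2.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, unresolvable or timed out
        return False
```

Run from the Hue host, `port_is_open("hiveserver.ent.com", 10000)` should return True before you restart Hue with the new host.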

**Note:** If HiveServer2 is on another machine and you are using security or a customized HiveServer2 configuration, you will also need to copy hive-site.xml to the Hue machine and point hive_conf_dir to its directory:

```ini
[beeswax]
  # Host where HiveServer2 is running.
  hive_server_host=localhost

  # Hive configuration directory, where hive-site.xml is located
  hive_conf_dir=/etc/hive/conf
```
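Once you have copied hive-site.xml, a short check that it sits where hive_conf_dir points can save a restart. The following is a hypothetical helper that uses only the standard library. The usage line reads hive.server2.authentication only as an example property.

```python
import os
import xml.etree.ElementTree as ET
from typing import Optional


def hive_site_value(hive_conf_dir: str, name: str) -> Optional[str]:
    """Read one property from <hive_conf_dir>/hive-site.xml.

    Returns None if the file or the property is missing.
    """
    path = os.path.join(hive_conf_dir, "hive-site.xml")
    if not os.path.isfile(path):
        return None
    root = ET.parse(path).getroot()
    for prop in root.iter("property"):
        if prop.findtext("name", default="").strip() == name:
            return prop.findtext("value", default="").strip()
    return None
```

For example, `hive_site_value("/etc/hive/conf", "hive.server2.authentication")` should return the same value as on the HiveServer2 machine.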

Impala

We need to specify the address of one of the Impalad daemons for interactive SQL in the Impala app.

```ini
[impala]
  # Host of the Impala Server (one of the Impalad)
  server_host=localhost
```
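When several Impalad daemons are available, you could pick the first one that accepts connections and use it as server_host. This is a sketch with a hypothetical helper. The port 21050 in the usage line is an assumption (Impala's usual HiveServer2-protocol port), and the hostnames are illustrative.

```python
import socket
from typing import Iterable, Optional, Tuple


def first_reachable(
    addresses: Iterable[Tuple[str, int]], timeout: float = 2.0
) -> Optional[Tuple[str, int]]:
    """Return the first (host, port) accepting a TCP connection, or None."""
    for host, port in addresses:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                return host, port
        except OSError:
            continue  # try the next Impalad
    return None
```

For example, `first_reachable([("impalad1.ent.com", 21050), ("impalad2.ent.com", 21050)])`. Checking in order keeps the choice predictable, which matters because Hue talks to the single host you configure.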

Solr Search

We just need to specify the address of a SolrCloud (or non-Cloud Solr) server, and then the interactive dashboard capabilities are unleashed!

```ini
[search]
  # URL of the Solr Server
  solr_url=http://localhost:8983/solr/
```
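The trailing slash in solr_url matters whenever a client resolves relative paths against it. The sketch below builds a Collections API LIST URL under the configured solr_url, which you can open to check that SolrCloud answers. It is a hypothetical helper and applies to SolrCloud only; a standalone Solr exposes its cores under admin/cores instead.

```python
from urllib.parse import urlencode, urljoin


def solr_collections_url(solr_url: str) -> str:
    """Collections API LIST call resolved under `solr_url`."""
    # Without a trailing slash, urljoin would replace the last path segment ("solr").
    base = solr_url if solr_url.endswith("/") else solr_url + "/"
    return urljoin(base, "admin/collections") + "?" + urlencode({"action": "LIST", "wt": "json"})
```

With `http://localhost:8983/solr`, a bare `urljoin` would yield `http://localhost:8983/admin/collections`, dropping the `/solr` context. Normalizing the slash avoids that.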

Oozie

An Oozie server should be up and running before submitting or monitoring workflows.

```ini
[liboozie]
  # The URL where the Oozie service runs on.
  oozie_url=http://localhost:11000/oozie
```
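The Oozie Web Services API exposes an admin status call, which is a convenient way to check oozie_url before submitting workflows. This is a sketch with hypothetical helpers. It builds the v1 status URL and interprets a response body such as `{"systemMode":"NORMAL"}`. Fetching the URL itself is left to curl or any HTTP client.

```python
import json


def oozie_status_url(oozie_url: str) -> str:
    """Admin status endpoint of the Oozie Web Services API under `oozie_url`."""
    return oozie_url.rstrip("/") + "/v1/admin/status"


def oozie_is_normal(response_body: str) -> bool:
    """True if the status reports NORMAL mode (not SAFEMODE or NOWEBSERVICE)."""
    return json.loads(response_body).get("systemMode") == "NORMAL"
```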

Pig

The Pig Editor requires Oozie to be set up with its sharelib.

HBase

The HBase app works with an HBase Thrift Server version 1. It lets you browse, query and edit HBase tables.

```ini
[hbase]
  # Comma-separated list of HBase Thrift server 1 for clusters in the format of '(name|host:port)'.
  hbase_clusters=(Cluster|localhost:9090)
```
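The `(name|host:port)` format is easy to get slightly wrong when listing several clusters. The sketch below parses the value so that you can validate it before restarting Hue. It is a hypothetical helper, not Hue's own parser, and it assumes that cluster names contain no commas or parentheses.

```python
import re
from typing import List, Tuple

# One entry: (name|host:port), with optional spaces around the parts.
CLUSTER_RE = re.compile(r"\(\s*([^|()]+?)\s*\|\s*([^:()]+):(\d+)\s*\)")


def parse_hbase_clusters(value: str) -> List[Tuple[str, str, int]]:
    """Split an hbase_clusters value into (name, host, port) tuples."""
    clusters = []
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        match = CLUSTER_RE.fullmatch(entry)
        if not match:
            raise ValueError(f"expected '(name|host:port)', got {entry!r}")
        name, host, port = match.groups()
        clusters.append((name, host.strip(), int(port)))
    return clusters
```

For example, `parse_hbase_clusters("(Cluster|localhost:9090)")` gives one entry named Cluster on localhost, port 9090.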

Sentry

Hue just needs to point to the machine with the Sentry server running.

```ini
[libsentry]
  # Hostname or IP of server.
  hostname=localhost
```

And that’s it! Now Hue will let you do Big Data directly from your browser without touching the command line! You can then follow up with some tutorials.

As usual, feel free to comment and send feedback on the hue-user list or @gethue!