Guest post from Andrew, which we update regularly (Dec 19th, 2014)

I recently deployed Hue 3.7 from tarballs on HDP 2.2 (other sources, such as the packages from the 'Install' menu above, would work too) and wanted to document some notes for anyone else looking to do the same.

Deployment Background:

- Node Operating System: CentOS 6.6 - 64bit

- Cluster Manager: Ambari 1.7

- Distribution: HDP 2.2

- Install Path (default): /usr/local/hue

- HUE User: hue

After compiling (some hints there), you may run into some out-of-the-box startup issues.

- Be sure to set the appropriate Hue proxy user/groups properties in your Hadoop service configurations (e.g. WebHDFS/WebHCat/Oozie/etc.).

- Don't forget to configure your Hue configuration file ('/usr/local/hue/desktop/conf/hue.ini') to use FQDN hostnames in the appropriate places.
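One way to catch non-FQDN hostnames is to scan the URL settings in hue.ini (for example webhdfs_url or oozie_url). The Python 3 sketch below is a hypothetical helper, not part of Hue. It flags any URL host that has no dot, is 'localhost', or is a bare IP address, so you can review it.

```python
import re
from urllib.parse import urlparse

# Hypothetical helper: matches "key=value" lines whose value is an http(s) URL.
URL_LINE = re.compile(r"^\s*([\w.]+)\s*=\s*(https?://\S+)\s*$")


def non_fqdn_urls(ini_text):
    """Return (key, hostname) pairs whose URL host does not look like an FQDN."""
    problems = []
    for line in ini_text.splitlines():
        if line.lstrip().startswith("#"):
            continue  # skip commented-out settings such as "## webhdfs_url=..."
        match = URL_LINE.match(line)
        if not match:
            continue
        key, url = match.groups()
        host = urlparse(url).hostname or ""
        is_ip = re.fullmatch(r"[\d.]+", host) is not None
        # Short names, localhost and raw IPs are reported for review.
        if host == "localhost" or "." not in host or is_ip:
            problems.append((key, host))
    return problems
```

For example, pass it the contents of /usr/local/hue/desktop/conf/hue.ini and review each reported key.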

Startup

Hue uses an SQLite database by default, and you may see the following error when attempting to connect to Hue at its default port (e.g. fqdn:8888):

- … File "/usr/local/hue/build/env/lib/python2.6/site-packages/Django-1.4.5-py2.6.egg/django/db/backends/sqlite3/base.py", line 344, in execute return Database.Cursor.execute(self, query, params) DatabaseError: unable to open database file

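SQLite stores its database in a file, so this error most likely means that the Hue-related files have the wrong ownership. SQLite also needs write access to the directory holding the database file, because it creates its journal file there.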

- We can fix this with the command 'chown -R hue:hue /usr/local/hue'

- For non-development usage, we recommend using MySQL instead of SQLite: https://github.com/cloudera/hue/blob/master/desktop/conf.dist/hue.ini#L292
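To double-check the ownership fix, a small Python 3 sketch can list anything under the Hue install that the hue user does not own. The function name is hypothetical. Run it as a user that can read the whole tree.

```python
import os
import pwd


def files_not_owned_by(root, user):
    """Return paths under root (root included) whose owner is not `user`.

    Hypothetical helper. Uses os.lstat so symlinks are checked themselves,
    not their targets; os.walk does not descend into symlinked directories.
    """
    expected_uid = pwd.getpwnam(user).pw_uid  # KeyError if the user does not exist
    wrong = []
    if os.lstat(root).st_uid != expected_uid:
        wrong.append(root)
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            if os.lstat(path).st_uid != expected_uid:
                wrong.append(path)
    return wrong
```

For example, files_not_owned_by('/usr/local/hue', 'hue') should return an empty list after the chown.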

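Removing apps

To hide apps you do not use (e.g. Impala, which is not part of HDP), list them in app_blacklist in hue.ini: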

<div>

<p>

<pre><code class="bash">[desktop]
  app_blacklist=impala
</code></pre>

</p>

</div>

HDFS


<div>

<div>

<div>

Did you remember to configure proxy user hosts and groups for your HDFS service configuration?

</div>

<p>

With Ambari, you can review your cluster's HDFS configuration, specifically under the "Custom core-site.xml" subsection:

</p>

</div>

<p>

There should be two new custom properties to support the Hue File Browser:

</p>

<p>

<pre><code class="xml"><property>
  <name>hadoop.proxyuser.hue.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hue.groups</name>
  <value>*</value>
</property>
</code></pre>

</p>
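If you maintain core-site.xml by hand rather than through Ambari, you can also generate the two properties. Below is a minimal Python 3 sketch using the standard library's xml.etree.ElementTree. The function name is hypothetical. Note that '*' allows any host and group, so you may want to narrow these values on a shared cluster.

```python
import xml.etree.ElementTree as ET


def proxyuser_properties(user="hue", hosts="*", groups="*"):
    """Build hadoop.proxyuser.<user>.hosts/.groups <property> elements (hypothetical helper)."""
    configuration = ET.Element("configuration")
    for suffix, value in (("hosts", hosts), ("groups", groups)):
        prop = ET.SubElement(configuration, "property")
        ET.SubElement(prop, "name").text = "hadoop.proxyuser.%s.%s" % (user, suffix)
        ET.SubElement(prop, "value").text = value
    # encoding="unicode" returns a str instead of bytes.
    return ET.tostring(configuration, encoding="unicode")
```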

<p>

With Ambari, you can also check that WebHDFS is enabled: go to the HDFS service settings and look under "General".

</p>

</div>

<p>

- The property name is dfs.webhdfs.enabled ("WebHDFS enabled") and should be set to "true" by default.

</p>

</div>

<p>

- If a change is required, save the change and start/restart the service with the updated configuration.

</p>

<p>

Ensure the HDFS service is started and operating normally.

</p>

</div>

<p>

- You can quickly check HDFS and WebHDFS by opening the NameNode web page:

</p>

</div>

<p>

- http://<NAMENODE-FQDN>:50070/ in a web browser, or 'curl http://<NAMENODE-FQDN>:50070/'

</p>
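WebHDFS is served under /webhdfs/v1 on the same NameNode port, so you can also query it directly. Below is a Python 3 sketch that assumes simple (non-Kerberos) authentication through the user.name parameter. It sends a GETFILESTATUS request for the HDFS root and checks that the reply describes a directory. The helper names are hypothetical.

```python
import json
import urllib.request
from urllib.parse import urlencode


def webhdfs_url(namenode, path="/", op="GETFILESTATUS", user="hue", port=50070):
    """Build a WebHDFS REST URL; user.name assumes simple (non-Kerberos) auth."""
    query = urlencode({"op": op, "user.name": user})
    return "http://%s:%d/webhdfs/v1%s?%s" % (namenode, port, path, query)


def is_directory(status_json):
    """Return True if a GETFILESTATUS JSON reply describes a directory."""
    return json.loads(status_json)["FileStatus"]["type"] == "DIRECTORY"


def check_webhdfs(namenode, timeout=10):
    """Query the HDFS root over WebHDFS.

    Raises urllib.error.URLError (or its subclass HTTPError) on failure.
    """
    with urllib.request.urlopen(webhdfs_url(namenode), timeout=timeout) as reply:
        return is_directory(reply.read().decode("utf-8"))
```

For example, check_webhdfs('<NAMENODE-FQDN>') should return True when WebHDFS is up.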

</div>

</div>

<p>

Check that the NameNode process is running with a shell command on your NameNode host:

</p>

- 'ps -ef | grep NameNode'
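Note that 'ps -ef | grep NameNode' also matches the grep process itself, and it matches the SecondaryNameNode if one runs on the same host. The Python 3 sketch below instead looks for the NameNode main class, org.apache.hadoop.hdfs.server.namenode.NameNode, as a whole token in the command line. The helper names are hypothetical, and the sketch assumes the standard 8-column 'ps -ef' output.

```python
import subprocess

NAMENODE_CLASS = "org.apache.hadoop.hdfs.server.namenode.NameNode"


def namenode_pids(ps_output):
    """Return PIDs from `ps -ef` output whose command runs the NameNode main class.

    Matching the exact class token skips the grep itself and the SecondaryNameNode.
    """
    pids = []
    for line in ps_output.splitlines()[1:]:  # first line is the UID PID ... header
        fields = line.split(None, 7)  # UID PID PPID C STIME TTY TIME CMD
        if len(fields) == 8 and NAMENODE_CLASS in fields[7].split():
            pids.append(int(fields[1]))
    return pids


def running_namenode_pids():
    """Run `ps -ef` locally and return any NameNode PIDs."""
    output = subprocess.run(["ps", "-ef"], capture_output=True, text=True, check=True).stdout
    return namenode_pids(output)
```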

<div>

By default your HDFS service(s) may not be configured to start automatically (e.g. upon boot/reboot).

</div>

<div>

Check the HDFS logs to see whether the NameNode service had trouble starting or started successfully:

</div>

<div>

- These are typically found at '/var/log/hadoop/hdfs/'

</div>
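To find out quickly why the NameNode did not start, you can pull out only the ERROR and FATAL entries from its log. Below is a Python 3 sketch that assumes Hadoop's default log4j layout, in which the level follows the timestamp (e.g. '2014-12-15 23:02:45,123 ERROR ...'). The NameNode log file is typically named along the lines of hadoop-hdfs-namenode-<host>.log, but check your own log directory. The function name is hypothetical.

```python
import re

# Assumed layout: "<date> <time>,<millis> <LEVEL> ..." (Hadoop's default log4j pattern).
LOG_LINE = re.compile(r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3} (\w+) ")


def problem_lines(log_lines, levels=("ERROR", "FATAL")):
    """Yield (line_number, line) for log entries at one of the given levels."""
    for number, line in enumerate(log_lines, start=1):
        match = LOG_LINE.match(line)
        if match and match.group(1) in levels:
            yield number, line.rstrip("\n")
```

Because it accepts any iterable of lines, you can pass an open log file directly, e.g. list(problem_lines(open(path))).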

</div>

</div>

</div>

</div>

</div>

</div>

</div>

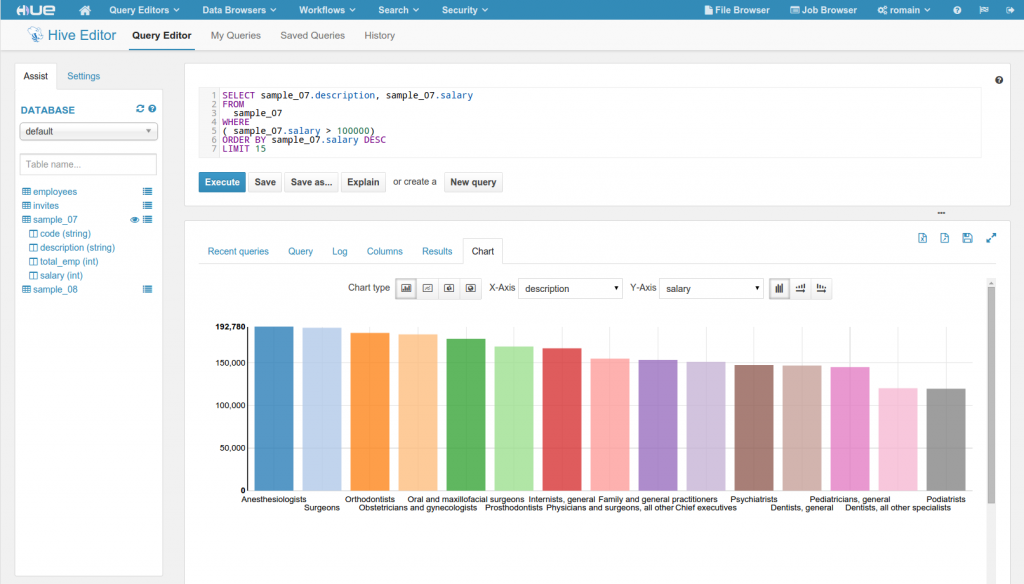

Hive Editor

- We'll need to set 'hive.server2.authentication=NOSASL' in our Hive configuration for the Hue Hive Editor to work.
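To confirm what HiveServer2 will pick up, you can read hive.server2.authentication straight from hive-site.xml (on HDP usually under /etc/hive/conf/). If the property is absent, HiveServer2 falls back to NONE, not NOSASL. Below is a Python 3 sketch with a hypothetical helper name.

```python
import xml.etree.ElementTree as ET


def hadoop_property(xml_text, name, default=None):
    """Return the <value> of the named <property> in a *-site.xml document."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name", "").strip() == name:
            return (prop.findtext("value") or "").strip()
    return default  # property not set in this file
```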

<div>

<p>

<pre><code class="bash">"Server does not support GetLog()"</code></pre>

</p>

</div>


<div>

If the Hive Editor fails with this error: HDP 2.2 ships Hive 0.14, which includes <a href="https://issues.apache.org/jira/browse/HIVE-4629">HIVE-4629</a>, so you will need this <a href="https://github.com/cloudera/hue/commit/6a0246710f7deeb0fd2e1f2b3b209ad119c30b72">commit</a> from Hue 3.8 (coming up at the end of Q1 2015) or use master, and then set the new option in hue.ini:

</div>

<div>

<div>

<div>

<p>

<pre><code class="bash">[beeswax]
  # Choose whether Hue uses the GetLog() thrift call to retrieve Hive logs.
  # If false, Hue will use the FetchResults() thrift call instead.
  use_get_log_api=false
</code></pre>

</p>

</div>

</div>

</div>

Security - HDFS ACLs Editor

By default, Hadoop 2.4.0 does not enable HDFS file access control lists (FACLs), so the Hue ACLs Editor fails with:

- AclException: The ACL operation has been rejected. Support for ACLs has been disabled by setting dfs.namenode.acls.enabled to false. (error 403)

- We'll need to change the properties of our HDFS NameNode service to enable FACLs ('dfs.namenode.acls.enabled'='true' in hdfs-site.xml), then restart the NameNode.
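After setting the property and restarting the NameNode, you can verify it through WebHDFS with op=GETACLSTATUS (e.g. http://<NAMENODE-FQDN>:50070/webhdfs/v1/tmp?op=GETACLSTATUS). Below is a Python 3 sketch that interprets the JSON reply. It assumes WebHDFS's usual error envelope ({"RemoteException": {"exception": ..., "message": ...}}), and the function name is hypothetical.

```python
import json


def acl_entries(reply_json):
    """Interpret a WebHDFS GETACLSTATUS reply (hypothetical helper).

    Returns the ACL entry strings (e.g. ["user:alice:rwx"]) on success and
    raises RuntimeError if the NameNode rejected the call.
    """
    reply = json.loads(reply_json)
    if "RemoteException" in reply:  # assumed WebHDFS error envelope
        error = reply["RemoteException"]
        if error.get("exception") == "AclException":
            raise RuntimeError("HDFS ACLs are disabled: " + error.get("message", ""))
        raise RuntimeError("%s: %s" % (error.get("exception"), error.get("message")))
    return reply["AclStatus"]["entries"]
```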

Spark

<div>

We are improving the Spark Editor and might change the Job Server. The setup is also still pretty manual, so we do not recommend it for now.

</div>

</div>


<h1>

HBase

</h1>

<p>

Currently not tested (should work with <a href="https://gethue.com/the-web-ui-for-hbase-hbase-browser/" target="_blank" rel="noopener noreferrer">Thrift Server 1</a>)

</p>

<div class="ajR" tabindex="0" data-tooltip="Show trimmed content">

<h1>

Job Browser

</h1>

<div>

Progress has never been entirely accurate for MapReduce completions: it always shows the percentage for Mappers vs. Reducers as a job progresses. The "Kill" feature works correctly.

</div>


<div>

<h1>

Oozie Editor/Dashboard

</h1>

<p>

<strong>Note</strong>: when Oozie is deployed via Ambari 1.7 for HDP 2.2, the sharelib files typically found under /usr/lib/oozie/ are missing and, in turn, are not staged at hdfs:/user/oozie/share/lib/ ...

</p>
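One way to see whether anything was staged is to list the sharelib directory over WebHDFS (op=LISTSTATUS on /user/oozie/share/lib). Below is a Python 3 sketch that extracts the entry names from the JSON reply. The helper names are hypothetical. Newer Oozie releases may place the libraries under a lib_<timestamp> subdirectory rather than directly in share/lib.

```python
import json


def listed_names(liststatus_json):
    """Return the sorted entry names from a WebHDFS LISTSTATUS reply."""
    statuses = json.loads(liststatus_json)["FileStatuses"]["FileStatus"]
    return sorted(status["pathSuffix"] for status in statuses)


def sharelib_looks_empty(liststatus_json):
    """True if the listed sharelib directory contains no entries at all."""
    return not listed_names(liststatus_json)
```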


<div>

I'll check this against an HDP 2.1 deployment and email the folks at Hortonworks to see whether they've seen this as well.

</div>


</div>

</div>

<h1>

Pig Editor

</h1>

<div>

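Make sure you have at least 2 nodes, or tweak YARN so that it can run two apps at the same time (<a href="http://blog.cloudera.com/blog/2014/04/apache-hadoop-yarn-avoiding-6-time-consuming-gotchas/">gotcha #5</a>), and that Oozie is configured correctly. A Pig script submitted from Hue runs through an Oozie launcher job, which occupies one YARN application, while the Pig job it starts needs another.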

</div>


<div>

The Pig/Oozie log looks like this:

</div>


<div>

<div>

<p>

<pre><code class="bash">2014-12-15 23:32:17,626 INFO ActionStartXCommand:543 - SERVER[hdptest.construct.dev] USER[amo] GROUP[-] TOKEN[] APP[pig-app-hue-script] JOB[0000001-141215230246520-oozie-oozi-W] ACTION[0000001-141215230246520-oozie-oozi-W@:start:] Start action [0000001-141215230246520-oozie-oozi-W@:start:] with user-retry state : userRetryCount [0], userRetryMax [0], userRetryInterval [10]
2014-12-15 23:32:17,627 INFO ActionStartXCommand:543 - SERVER[hdptest.construct.dev] USER[amo] GROUP[-] TOKEN[] APP[pig-app-hue-script] JOB[0000001-141215230246520-oozie-oozi-W] ACTION[0000001-141215230246520-oozie-oozi-W@:start:] [***0000001-141215230246520-oozie-oozi-W@:start:***]Action status=DONE
2014-12-15 23:32:17,627 INFO ActionStartXCommand:543 - SERVER[hdptest.construct.dev] USER[amo] GROUP[-] TOKEN[] APP[pig-app-hue-script] JOB[0000001-141215230246520-oozie-oozi-W] ACTION[0000001-141215230246520-oozie-oozi-W@:start:] [***0000001-141215230246520-oozie-oozi-W@:start:***]Action updated in DB!
2014-12-15 23:32:17,873 INFO ActionStartXCommand:543 - SERVER[hdptest.construct.dev] USER[amo] GROUP[-] TOKEN[] APP[pig-app-hue-script] JOB[0000001-141215230246520-oozie-oozi-W] ACTION[0000001-141215230246520-oozie-oozi-W@pig] Start action [0000001-141215230246520-oozie-oozi-W@pig] with user-retry state : userRetryCount [0], userRetryMax [0], userRetryInterval [10]
</code></pre>

</p>

</div>

</div>
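When following a Pig action through these logs, it can help to pull out the job and action IDs. Below is a Python 3 sketch that splits a log line into its timestamp, level, logger and KEY[value] fields (SERVER, USER, JOB, ACTION, ...). It assumes every line follows the layout shown above, and the function name is hypothetical.

```python
import re

# Oozie prefixes each message with KEY[value] fields, e.g. JOB[...] ACTION[...].
FIELD = re.compile(r"\b(SERVER|USER|GROUP|TOKEN|APP|JOB|ACTION)\[([^\]]*)\]")
# "<date> <time> <LEVEL> <logger> - " at the start of each line (assumed layout).
HEADER = re.compile(r"^(\S+ \S+) (\w+) (\S+) - ")


def parse_oozie_line(line):
    """Split an Oozie log line into time, level, logger and its KEY[value] fields."""
    header = HEADER.match(line)
    if not header:
        return None  # not in the expected layout
    record = {"time": header.group(1), "level": header.group(2), "logger": header.group(3)}
    record.update(FIELD.findall(line))  # list of (key, value) pairs
    return record
```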