Hue continues to make cloud platforms easier to use.

We’re happy to present compatibility with Microsoft Azure Data Lake Store Gen2 (ADLS Gen2). The Hue 4.6 release brings the ability to read from and write to a configured ADLS Gen2. As with ADLS, users can save data to ADLS Gen2 without copying or moving it to HDFS. The difference between ADLS Gen1 and Gen2 is that ADLS Gen2 does not rely on the HDFS driver. Instead, it provides its own driver to manage its own filesystems.

In case you missed the post for ADLS (also known as ADLS Gen1), here is the link to the post.

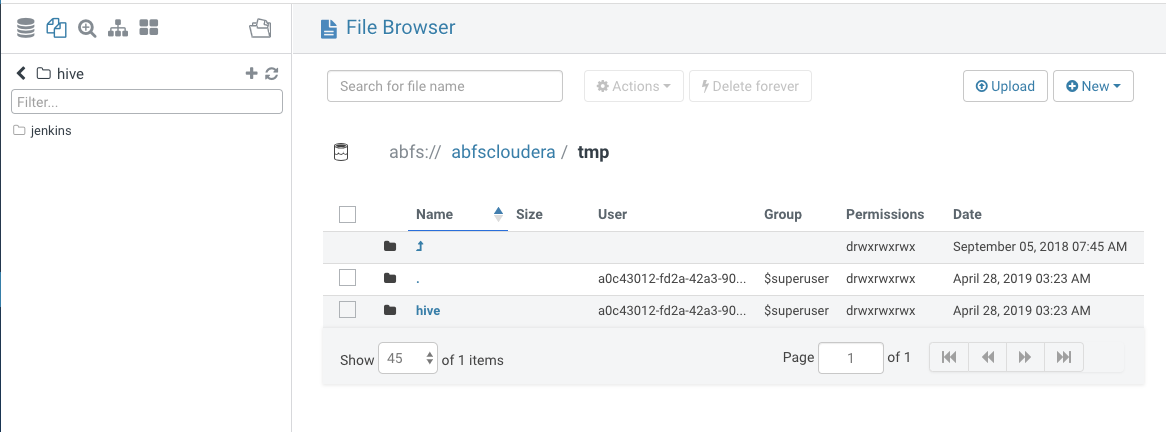

Exploring ADLS in Hue’s file browser

Once Hue is configured to connect to ADLS Gen2, we can view all accessible folders within the account by clicking on ABFS (Azure Blob Filesystem). It is listed as ABFS because ADLS Gen2 branches off from Hadoop and uses its own driver, called ABFS. Through ABFS, we can view filesystems, directories and files, as well as create, rename, move, copy, or delete existing directories and files. Files can also be uploaded to ADLS Gen2, although not to the root directory. In other words, Hue covers the basics of filesystem management.

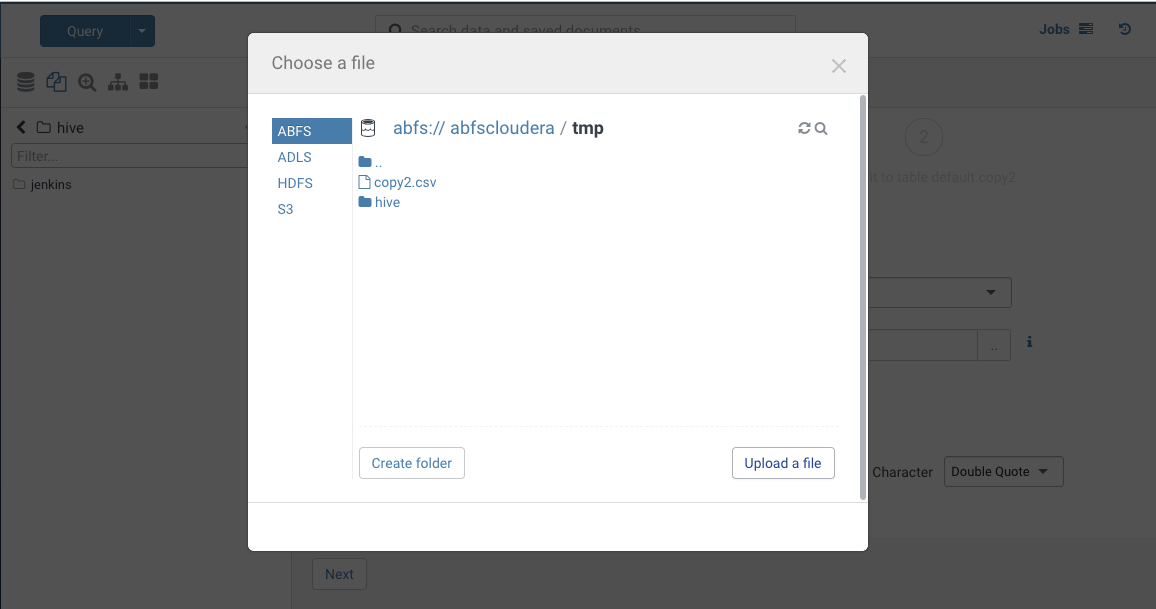

Create Hive Tables directly from ADLS Gen2

As mentioned in the ADLS blog post, Hue’s import wizard can create external Hive tables directly from files in ADLS Gen2, which can then be queried via SQL from Hive. Impala support needs some more testing. To create an external Hive table from ADLS, navigate to the table browser, select the desired database, and then select the New icon. Select a file using the file picker and browse to a file on ADLS.

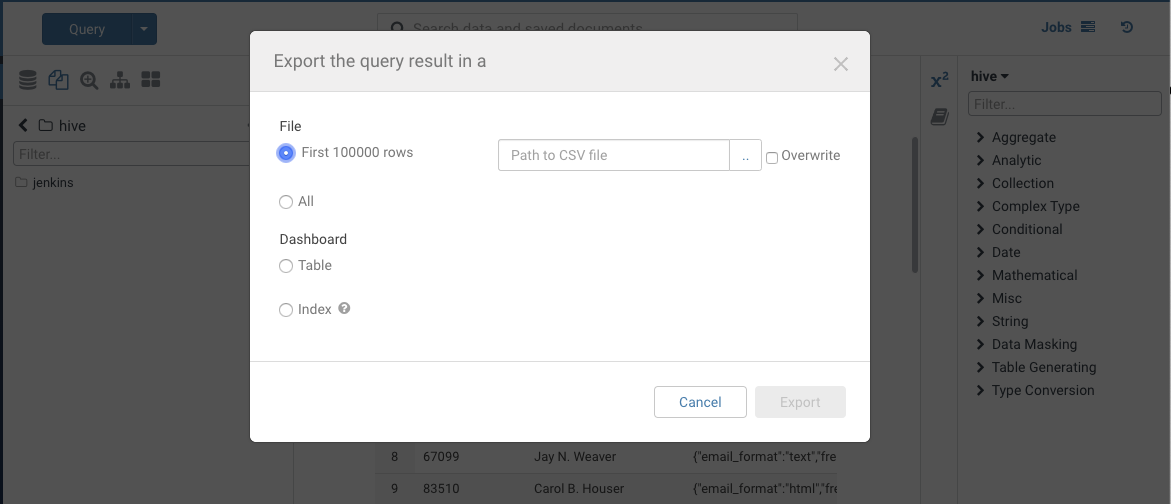

Save Query Results to ADLS Gen2

To save your tables to ADLS Gen2, select ABFS as your filesystem and export the table. The table data should then be saved to ABFS. Note that table data cannot be saved in the root directory.

ADLS Gen2 Configuration

Hue’s file browser allows users to explore, manage, and upload data in both versions of ADLS.

As a result of adding ADLS Gen2, the configuration for ADLS is different. Nonetheless, the same client ID, client secret and tenant ID can be used for ADLS Gen2. The same scripts can also be used to output the actual access key and secret key to stdout to be read by Hue. To use script files, add the following section to your hue.ini configuration file:

    [azure]
      [[azure_accounts]]
        [[[default]]]
          client_id_script=/path/to/client_id_script.sh
          client_secret_script=/path/to/client_secret_script.sh
          tenant_id_script=/path/to/tenant_id_script.sh
      [[abfs_clusters]]
        [[[default]]]
          fs_defaultfs=abfs://<filesystem_name>@<account_name>.dfs.core.windows.net
          webhdfs_url=https://<account_name>.dfs.core.windows.net
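
For readers who have not written such a script before, here is a minimal sketch of one in Python rather than shell. It assumes Hue only needs the credential printed to stdout, as described above. The environment variable name `AZURE_CLIENT_ID` and the helper names are hypothetical and chosen only for illustration.

```python
import os
import sys


def read_credential(env_var, environ=None):
    """Return the credential stored in env_var, without surrounding whitespace."""
    environ = os.environ if environ is None else environ
    value = environ.get(env_var, "").strip()
    if not value:
        raise LookupError(f"{env_var} is not set or is empty")
    return value


def main(env_var="AZURE_CLIENT_ID"):
    # AZURE_CLIENT_ID is a hypothetical variable name, not something Hue defines.
    try:
        sys.stdout.write(read_credential(env_var))
    except LookupError as err:
        # Report the problem on stderr so stdout carries only the credential.
        sys.stderr.write(f"{err}\n")
        return 1
    return 0

# When saving this as an executable script, end the file with: sys.exit(main())
```

Keeping the lookup in its own function makes it easy to swap the environment variable for another secret source without touching the part that writes to stdout.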

If an ADLS configuration already exists, just add the following to the end of the “[azure]” section:

      [[abfs_clusters]]
        [[[default]]]
          fs_defaultfs=abfs://<filesystem_name>@<account_name>.dfs.core.windows.net
          webhdfs_url=https://<account_name>.dfs.core.windows.net
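
Both values follow the same pattern, so they can be derived from the filesystem name and the account name. The sketch below only illustrates how the placeholders are filled in. The function names and the sample values `myfs` and `myaccount` are hypothetical.

```python
def abfs_settings(filesystem_name, account_name):
    """Build the two abfs_clusters values from the placeholders used above."""
    host = f"{account_name}.dfs.core.windows.net"
    return {
        "fs_defaultfs": f"abfs://{filesystem_name}@{host}",
        "webhdfs_url": f"https://{host}",
    }


def render_abfs_section(filesystem_name, account_name):
    """Render the [[abfs_clusters]] block as text ready to paste under [azure]."""
    lines = ["[[abfs_clusters]]", "[[[default]]]"]
    for key, value in abfs_settings(filesystem_name, account_name).items():
        lines.append(f"{key}={value}")
    return "\n".join(lines)


# "myfs" and "myaccount" are made-up sample values.
print(render_abfs_section("myfs", "myaccount"))
```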

Integrating Hadoop with ADLS Gen2

If ADLS Gen1 is already configured in Hadoop, ADLS Gen2 should be able to use the same authentication credentials to read from and save to ADLS. If the ADLS Gen1 credentials do not work, or the user does not have them, set the following properties in the core-site.xml file.

    <property>
      <name>fs.azure.account.auth.type</name>
      <value>OAuth</value>
    </property>
    <property>
      <name>fs.azure.account.oauth.provider.type</name>
      <value>org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider</value>
    </property>
    <property>
      <name>fs.azure.account.oauth2.client.id</name>
      <value>azureclientid</value>
    </property>
    <property>
      <name>fs.azure.account.oauth2.client.secret</name>
      <value>azureclientsecret</value>
    </property>
    <property>
      <name>fs.azure.account.oauth2.client.endpoint</name>
      <value>https://login.microsoftonline.com/${azure_tenant_id}/oauth2/token</value>
    </property>
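
Since the five properties differ only in the client ID, client secret and tenant ID, they can be generated instead of typed by hand. The following is a rough sketch using Python's standard `xml.etree.ElementTree` module. The function names are hypothetical, and the output still has to be placed inside the `<configuration>` element of core-site.xml manually.

```python
import xml.etree.ElementTree as ET


def oauth_properties(client_id, client_secret, tenant_id):
    """Return the core-site.xml property names and values listed above."""
    return {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": client_id,
        "fs.azure.account.oauth2.client.secret": client_secret,
        "fs.azure.account.oauth2.client.endpoint":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


def to_property_xml(properties):
    """Serialize each name/value pair as a <property> element, one per line."""
    chunks = []
    for name, value in properties.items():
        prop = ET.Element("property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
        chunks.append(ET.tostring(prop, encoding="unicode"))
    return "\n".join(chunks)


# The three arguments are placeholders, not real credentials.
print(to_property_xml(oauth_properties("azureclientid", "azureclientsecret", "azure_tenant_id")))
```

Using an XML library rather than string formatting means special characters in a secret, such as `&` or `<`, are escaped correctly.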

Hue integrates with HDFS, S3, ADLS v1/v2 and soon Google Cloud Storage. So go query data in these storage systems!