If you’ve ever struggled with configuring Hue to allow your users to create new SQL tables from CSV files on their own in the public cloud, you’ll be happy to learn that this is now much easier.

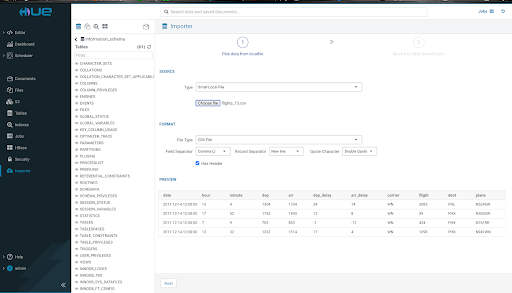

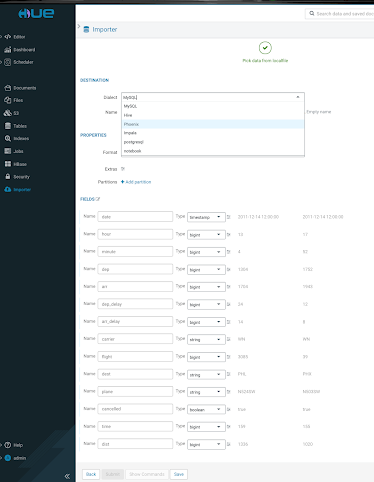

If you're a pro Hue user, you might be familiar with the Hue Importer, which lets you create tables from a file. Until now, the file had to be available on HDFS or on cloud object storage such as S3 or ABFS. Now you can also browse and select files from your computer to create tables in different SQL dialects. The Apache Hive, Apache Impala, Apache Phoenix, and MySQL dialects are supported.

Goal

- Upload files with the Hue Importer regardless of where they are stored.

Why

- Not everyone has access to HDFS or S3/ABFS. Business analysts often need to quickly analyze data sets stored on their own computers, without first going through data cleanup or other data engineering tasks.

- This feature enables you to import files from your computer and create tables in a few clicks.

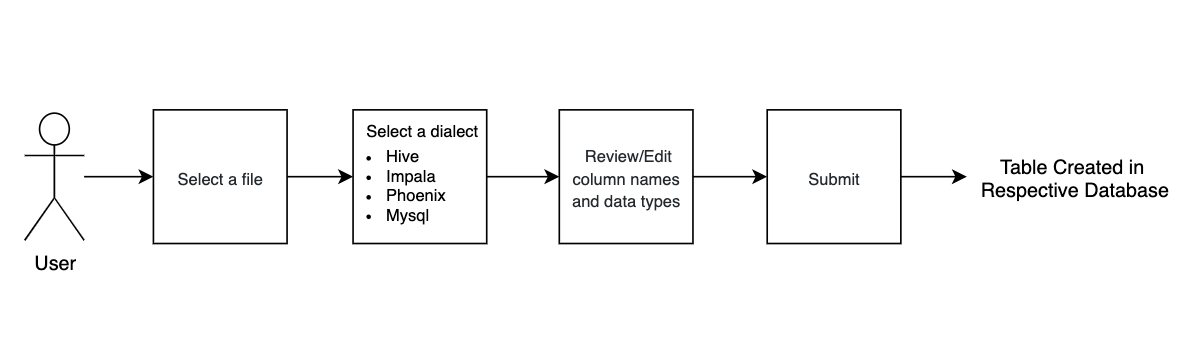

Steps to create a table

Workflow

Files and APIs

- We use three APIs to implement this feature:
  - `guess_format` (to guess the file format)
  - `guess_field_types` (to guess the column types)
  - `importer_submit` (to create the table)

- If you are curious about how the various SQL dialects are implemented, take a look at the `sql.py` file.
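To illustrate why each dialect needs its own handling, here is a minimal, hypothetical Python sketch of turning guessed columns into a `CREATE TABLE` statement. It is not Hue's `sql.py`: the `build_create_table` and `quote` helpers, the generic type names, the type mapping, and the choice of the first column as the Phoenix primary key are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical mapping from generic guessed types to dialect-specific SQL types.
TYPE_MAP: Dict[str, Dict[str, str]] = {
    "hive": {"string": "STRING", "int": "INT", "double": "DOUBLE"},
    "impala": {"string": "STRING", "int": "INT", "double": "DOUBLE"},
    "mysql": {"string": "VARCHAR(255)", "int": "INT", "double": "DOUBLE"},
    "phoenix": {"string": "VARCHAR", "int": "INTEGER", "double": "DOUBLE"},
}


def quote(dialect: str, name: str) -> str:
    """Quote an identifier using the dialect's usual convention."""
    if dialect == "phoenix":
        return f'"{name}"'  # Phoenix uses ANSI double-quoted identifiers
    return f"`{name}`"  # Hive, Impala and MySQL accept backticks


def build_create_table(dialect: str, table: str, columns: List[Tuple[str, str]]) -> str:
    """Build a CREATE TABLE statement from (column_name, generic_type) pairs."""
    types = TYPE_MAP[dialect]
    defs = [f"{quote(dialect, name)} {types[kind]}" for name, kind in columns]
    if dialect == "phoenix":
        # Assumption: every Phoenix table needs a primary key; use the first column.
        defs[0] += " NOT NULL PRIMARY KEY"
    ddl = f"CREATE TABLE {quote(dialect, table)} (\n  " + ",\n  ".join(defs) + "\n)"
    if dialect in ("hive", "impala"):
        # Store the uploaded CSV rows as comma-delimited text.
        ddl += "\nROW FORMAT DELIMITED FIELDS TERMINATED BY ','"
    return ddl


if __name__ == "__main__":
    print(build_create_table("mysql", "sales", [("id", "int"), ("price", "double")]))
```

Keeping the type mapping in a per-dialect table means a new dialect is mostly one more entry, although real dialects also differ in storage clauses and key requirements, as the Hive/Impala and Phoenix branches hint.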

Note: Hue currently supports only small CSV files, containing up to a few thousand rows.

Intermediate steps

- Step 1: You select a file. Hue guesses the file format, identifies the delimiter, and generates a table preview.

- Step 2: You select a SQL dialect, and Hue auto-detects the column data types. You can edit the column names and their data types.
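The two steps above can be approximated with Python's standard `csv` module. This is a minimal, hypothetical sketch, not Hue's implementation: the local functions `guess_format`, `guess_type`, and `guess_field_types` are only named after the Hue APIs, and the use of `csv.Sniffer`, the candidate delimiters, the five-row preview, and the generic `int`/`double`/`string` types are assumptions for illustration.

```python
import csv
import io
from typing import Dict, List, Tuple


def guess_format(sample: str) -> Dict:
    """Step 1 (sketch): detect the delimiter and header row, then build a preview."""
    sniffer = csv.Sniffer()
    dialect = sniffer.sniff(sample, delimiters=",;\t|")
    has_header = sniffer.has_header(sample)
    rows = list(csv.reader(io.StringIO(sample), dialect))
    if has_header:
        header, data = rows[0], rows[1:]
    else:
        header, data = [f"col_{i}" for i in range(len(rows[0]))], rows
    return {"delimiter": dialect.delimiter, "header": header, "preview": data[:5]}


def guess_type(values: List[str]) -> str:
    """Return the narrowest generic type that parses every sampled value."""
    for name, cast in (("int", int), ("double", float)):
        try:
            for value in values:
                cast(value)
            return name
        except ValueError:
            continue
    return "string"


def guess_field_types(fmt: Dict) -> List[Tuple[str, str]]:
    """Step 2 (sketch): infer one generic type per column from the preview rows."""
    columns = zip(*fmt["preview"])
    return [(name, guess_type(list(col))) for name, col in zip(fmt["header"], columns)]


if __name__ == "__main__":
    sample = "id,name,price\n1,alice,9.5\n2,bob,12.0\n3,carol,7.25\n"
    fmt = guess_format(sample)
    print(fmt["delimiter"], fmt["header"])
    print(guess_field_types(fmt))
```

On the sample in the `__main__` block, the sketch detects a comma delimiter and a header row and infers `int`, `string`, and `double` for `id`, `name`, and `price`. Like any sampling-based guess it can be wrong, which is why being able to edit column names and types in step 2 matters.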

Now it’s time to play with this feature in the latest Hue or at demo.gethue.com.

The project gladly welcomes contributions that add support for more SQL dialects.

Any feedback or questions? Feel free to comment here or on the Forum, and quick start SQL querying!

Onwards!

Ayush from the Hue Team