The following steps show how to execute a Shell Action from the Oozie Editor.

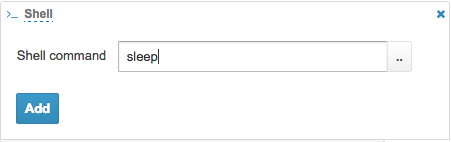

If the executable is a standard UNIX command, enter it directly in the Shell Command field and click the Add button.

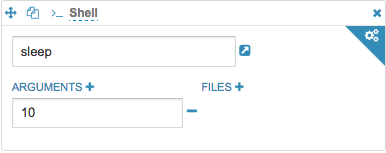

Arguments to the command can be added by clicking the Arguments+ button.

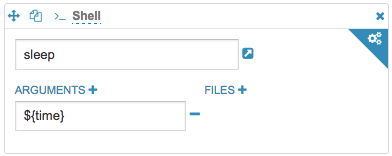

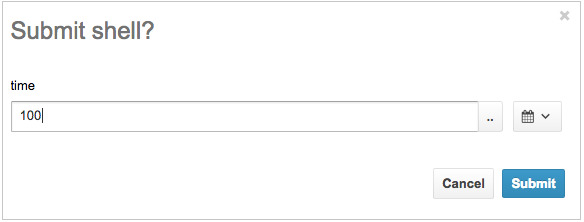

The ${VARIABLE} syntax lets you enter the value dynamically in the Submit popup.

If you are using a Hue version earlier than 4.3 (from 4.3 onward this step is automated):

If the executable is a script rather than a standard UNIX command, copy it to HDFS and specify its path using the File Chooser in the Files+ field.
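Copying the script to HDFS can be done from any host with the Hadoop client installed. The sketch below is one way to do it, not the only one: the file name `my_script.sh`, the directory `/user/demo/oozie/scripts`, the helper `upload_script` and the `DRY_RUN` switch are all placeholders introduced for illustration. By default it only prints the `hdfs dfs` commands it would run.

```shell
#!/usr/bin/env bash
# Sketch only: my_script.sh and /user/demo/oozie/scripts are hypothetical names.

# Copies a local script into an HDFS directory.
# Prints the commands by default; set DRY_RUN=0 on a host with the hdfs client to run them.
upload_script() {
  script="$1"
  target_dir="$2"
  if [ "${DRY_RUN:-1}" = "1" ]; then
    run="echo"
  else
    run=""
  fi
  # Create the target directory if needed, then overwrite any previous copy.
  $run hdfs dfs -mkdir -p "$target_dir" &&
    $run hdfs dfs -put -f "$script" "$target_dir/"
}

upload_script my_script.sh /user/demo/oozie/scripts
```

Once the file is in HDFS, the same path is what you would pick with the File Chooser in the Files+ field.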

```shell
#!/usr/bin/env bash

sleep
```
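Note that `sleep` needs a duration operand to run. A minimal sketch of a variant that reads the duration from its first argument is shown below, assuming the value is supplied through the Arguments+ field (Oozie passes Shell action arguments to the executable as positional parameters). The function name `pause_for`, the usage message and the default of `0` seconds are illustrative choices, not part of the original script.

```shell
#!/usr/bin/env bash
# Sketch only: pauses for the number of seconds given as the first argument.

pause_for() {
  # Fall back to 0 seconds when no argument is supplied.
  seconds="${1:-0}"
  # Accept non-negative integers only.
  case "$seconds" in
    *[!0-9]*)
      echo "usage: pause_for <seconds>" >&2
      return 1
      ;;
  esac
  sleep "$seconds"
  echo "slept for ${seconds}s"
}

pause_for "$@"
```

With this variant, an argument such as `${VARIABLE}` in the editor would let you choose the duration in the Submit popup.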

Additional Shell action properties can be set by clicking the settings button in the top-right corner.

The next version will support entering a script path directly in the Shell Command field, alongside standard UNIX commands, making it even more intuitive.