Hello Oozie users,

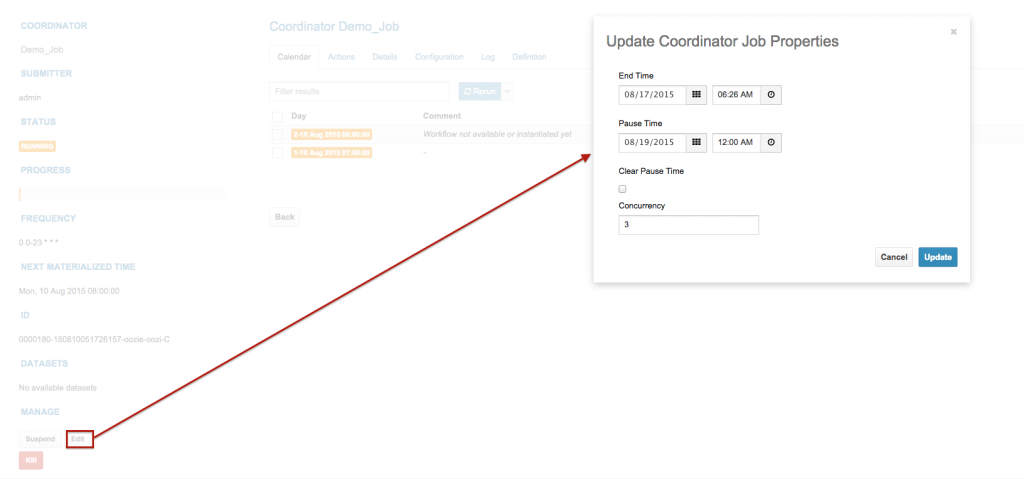

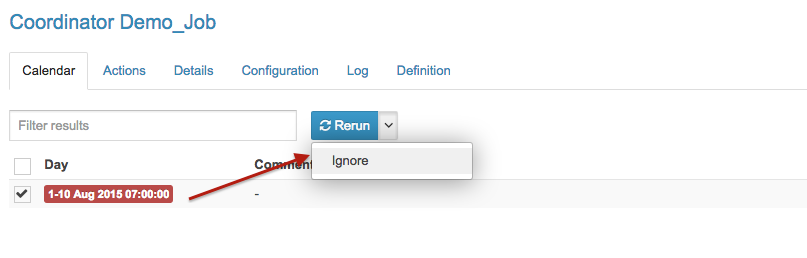

The Hue 3.9 release comes with several improvements to the Oozie dashboard, making it more robust and scalable.

Here is a video demoing the new features:

The new feature list:

Next!

Our focus in the near future will be on improving usability, such as easier updates of a scheduled workflow and better navigation of the workflow action dashboard. So, feel free to suggest new improvements or comment on the hue-user list or @gethue!