Oozie is one of the first major apps in Hue. We are continuously investing in making it better and just made a major jump in its editor (the improvements to the Dashboard are covered in the other post).

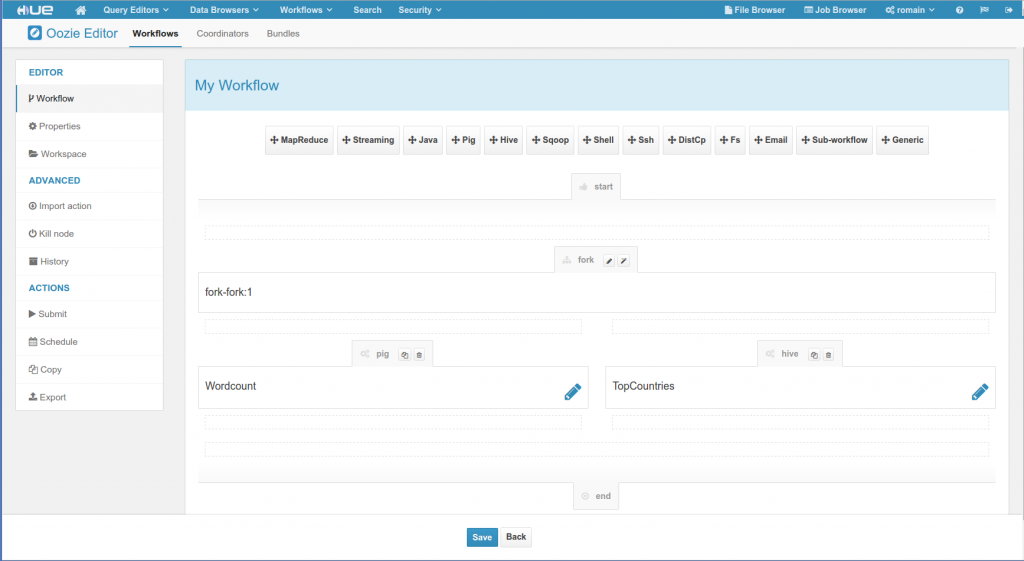

This revamp of the Oozie Editor brings a new look and requires much less knowledge of Oozie! Workflows now support dozens of new features and require just a few clicks to set up!

The files used in the videos come with the Oozie Examples.

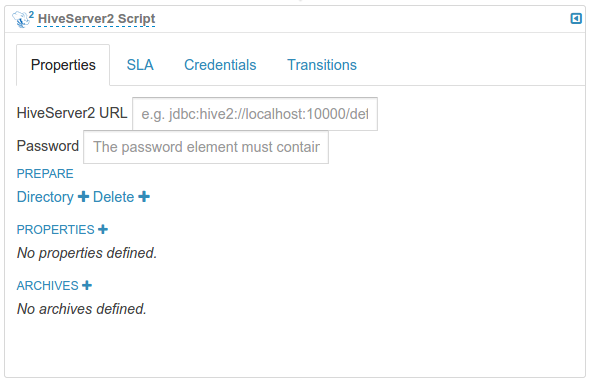

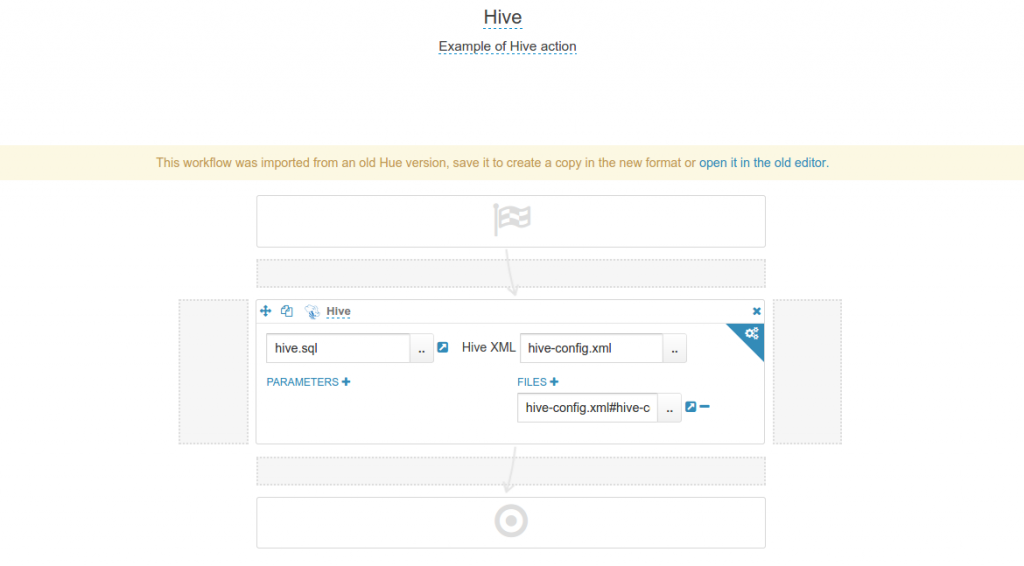

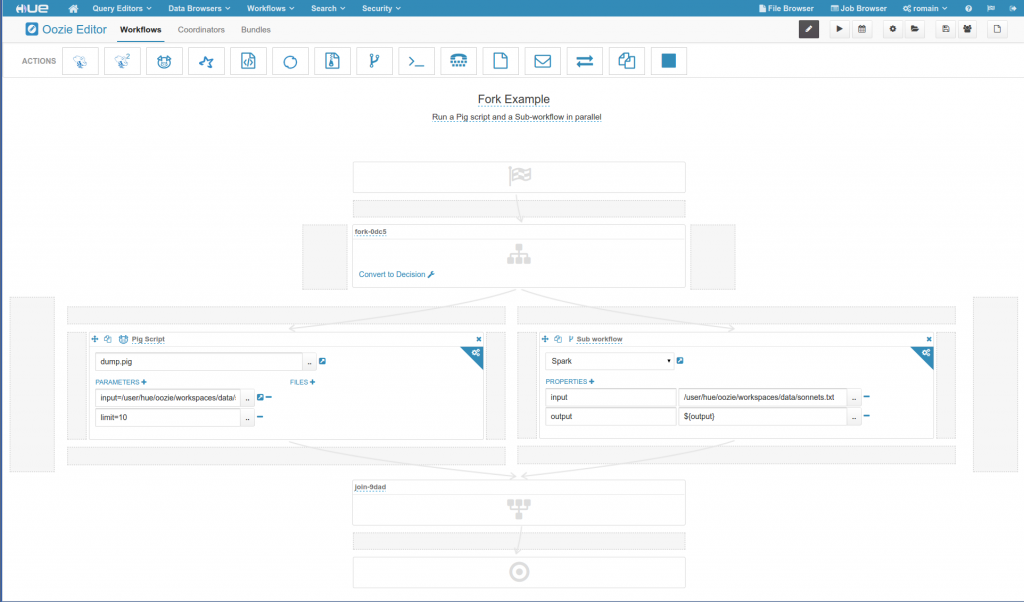

In the new interface, you are only asked to fill in the most important properties of an action, and quick-links for verifying paths and other jobs are offered. Hive and Pig script files are parsed in order to extract their parameters and propose them directly with autocomplete. The advanced functionalities of an action are available in a new kind of popup with much less friction, as it simply overlaps with the current node.
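To give an idea of what extracting parameters from a script can look like, here is a minimal Python sketch. It is not Hue's actual parser: the regular expression, the `extract_parameters` helper and the sample query are all assumptions made for illustration, covering only simple `${name}` and `$name` placeholders.

```python
import re

# Matches ${name} and $name placeholders. This pattern is an assumption made
# for illustration, not the expression Hue uses.
PARAM_PATTERN = re.compile(r"\$\{(\w+)\}|\$(\w+)")


def extract_parameters(script_text):
    """Return the distinct placeholder names, in order of first appearance."""
    names = []
    for braced, bare in PARAM_PATTERN.findall(script_text):
        name = braced or bare
        if name not in names:
            names.append(name)
    return names


# Hypothetical Hive script used only for this example.
script = """
INSERT OVERWRITE DIRECTORY '${output}'
SELECT * FROM logs WHERE day = '${day}' AND country = '${country}';
"""

print(extract_parameters(script))  # ['output', 'day', 'country']
```

A list like this is what an editor could then propose in an autocomplete drop-down, so that only the values remain to be typed.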

Two new actions have been added:

- HiveServer2

- Spark

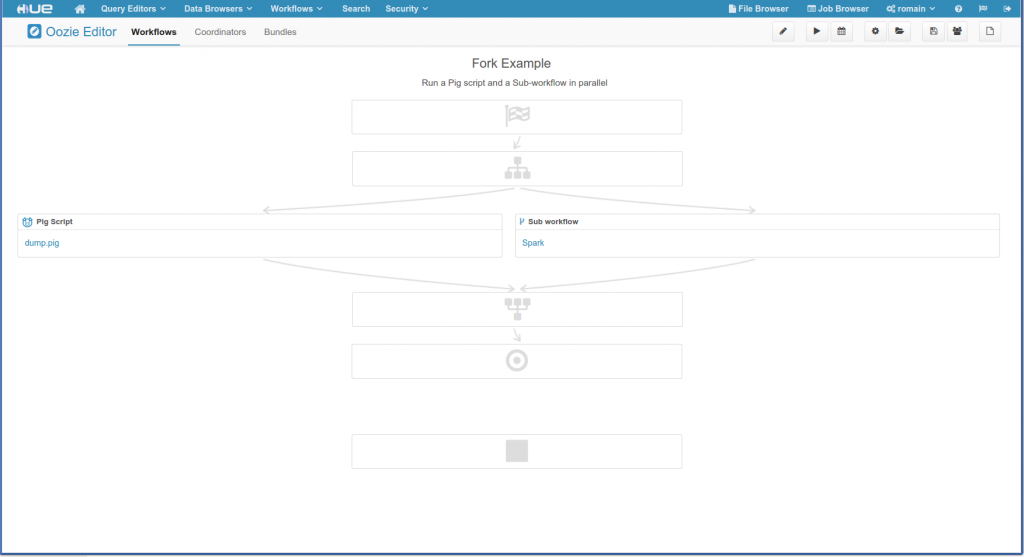

And the user experience of Pig and Sub-workflows is simplified.

Decision node support has been improved, and copying an existing action is now just a matter of dragging and dropping. Some new layouts are now possible as the ‘ok’ and ‘end’ nodes can be individually changed.

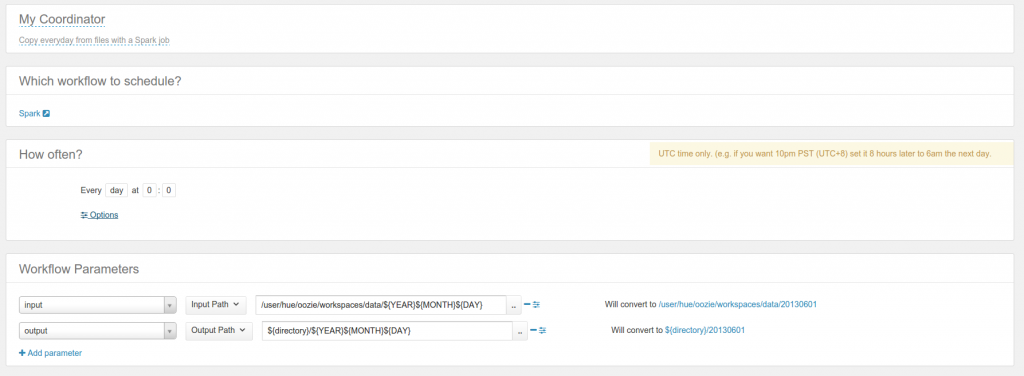

Coordinators have been vastly improved! The notion of Oozie datasets is not needed anymore. The editor pulls the parameters of your workflow and offers 3 types of inputs:

- parameters: a constant or an Oozie EL function such as time

- input path: parameterize an input path dependency and wait for it to exist, e.g.

- output path: like an input path but does not need to exist for starting the job
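As a rough illustration of a parameterized path, the Python sketch below resolves a path template for one scheduled run. It assumes the `${YEAR}`, `${MONTH}`, `${DAY}`, `${HOUR}` and `${MINUTE}` placeholders that Oozie uses in dataset URI templates; the `resolve_path` helper and the `/data/logs` directory are hypothetical, and the check that the path actually exists on HDFS is left out.

```python
from datetime import datetime, timezone
from string import Template


def resolve_path(template, nominal_time):
    """Fill the date placeholders of a path template for one scheduled run."""
    values = {
        "YEAR": f"{nominal_time.year:04d}",
        "MONTH": f"{nominal_time.month:02d}",
        "DAY": f"{nominal_time.day:02d}",
        "HOUR": f"{nominal_time.hour:02d}",
        "MINUTE": f"{nominal_time.minute:02d}",
    }
    # substitute() raises KeyError on an unknown placeholder instead of
    # silently leaving it in the path.
    return Template(template).substitute(values)


# Hypothetical daily input directory.
run_time = datetime(2015, 1, 5, tzinfo=timezone.utc)
print(resolve_path("/data/logs/${YEAR}/${MONTH}/${DAY}", run_time))
# /data/logs/2015/01/05
```

For an input path, the job would wait until the resolved directory exists; for an output path, the same resolution applies but nothing needs to exist beforehand.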

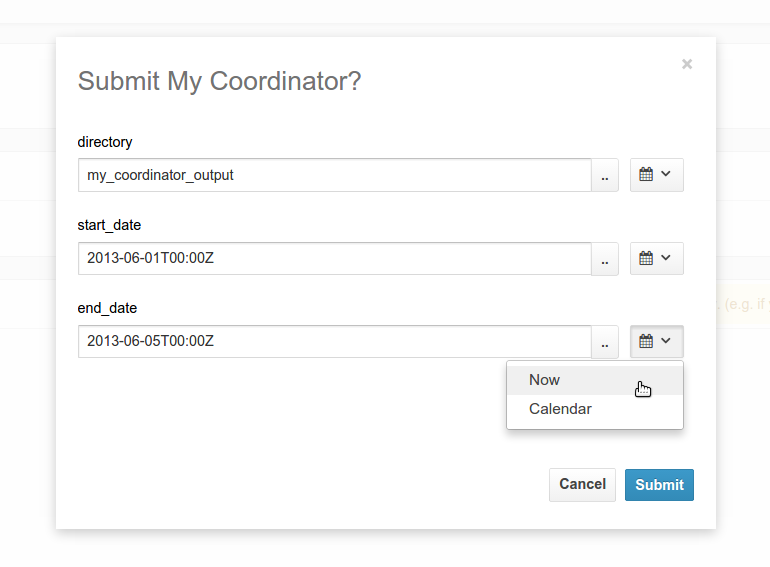

The dreaded UTC time zone format is now directly provided either by the calendar or with some helper widgets.
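For context, Oozie coordinators express their start and end times in UTC, in a form like `2015-03-02T02:30Z`. The Python sketch below shows the kind of conversion that otherwise has to be done by hand; the `to_oozie_utc` helper and the fixed UTC-8 offset are assumptions made for this example.

```python
from datetime import datetime, timedelta, timezone

# UTC timestamp layout used for coordinator start and end times.
OOZIE_TIME_FORMAT = "%Y-%m-%dT%H:%MZ"


def to_oozie_utc(local_time):
    """Convert a timezone-aware datetime to a UTC coordinator timestamp."""
    if local_time.tzinfo is None:
        raise ValueError("expected a timezone-aware datetime")
    return local_time.astimezone(timezone.utc).strftime(OOZIE_TIME_FORMAT)


# Hypothetical fixed offset of UTC-8, used only for this example.
pacific = timezone(timedelta(hours=-8))
print(to_oozie_utc(datetime(2015, 3, 1, 18, 30, tzinfo=pacific)))
# 2015-03-02T02:30Z
```

Note how an evening start time on March 1st lands on March 2nd once converted, which is exactly the kind of mistake a calendar widget helps to avoid.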

Sum-up

In addition to providing a friendlier end user experience, this new architecture opens the way for innovations.

First, it makes it easy to add new Oozie actions in the editor. But most importantly, workflows are persisted using the new Hue document model, meaning their import/export is simplified and will soon be available directly from the UI. This model also enables the future generation of your workflows by just dragging and dropping saved Hive, Pig and Spark jobs directly into the workflow. No need to manually duplicate your queries on HDFS!

This also opens the door to one-click scheduling of any job saved in Hue, as the coordinators are much simpler to use now. While we are continuing to polish the new editor, the Dashboard section of the app will see a major revamp next!

As usual, feel free to comment on the hue-user list or @gethue!

Note

Old workflows are not automatically converted to the new format. Hue will try to import them for you, and will open them in the old editor in case of problems.

A new import / export is planned for Hue 4. It will let you export workflows in both XML and Hue's JSON format, and import them from Hue’s format.