December 8th 2015 update: this post is now deprecated as of Hue 3.9: https://gethue.com/automatic-high-availability-and-load-balancing-of-hue-in-cloudera-manager-with-monitoring/

By default, Hue installs on a single machine, so it is constrained to that machine’s CPU and memory, which can limit the total number of active users before Hue becomes unstable. Furthermore, even a lightly loaded machine can crash, which would take Hue out of service. This tutorial demonstrates hue-lb-example, an example load balancer that can automatically configure NGINX and HAProxy for a Cloudera Manager-managed Hue.

Before we demonstrate its use, we need to set up a few things.

Configuring Hue in Cloudera Manager

Hue should be set up on at least two of the nodes in Cloudera Manager and be configured to use a database like MySQL, PostgreSQL, or Oracle configured in a high availability manner. Furthermore, the database must be configured to be accessible from all the Hue instances. You can find detailed instructions on setting up or migrating the database from SQLite here.

Once the database has been set up, the following instructions describe setting up a fresh install. If you have an existing Hue, jump to step 5.

1. Start from Cloudera Manager.

2. Go to “Add a Service -> Hue”, and follow the directions to create the first Hue instance.

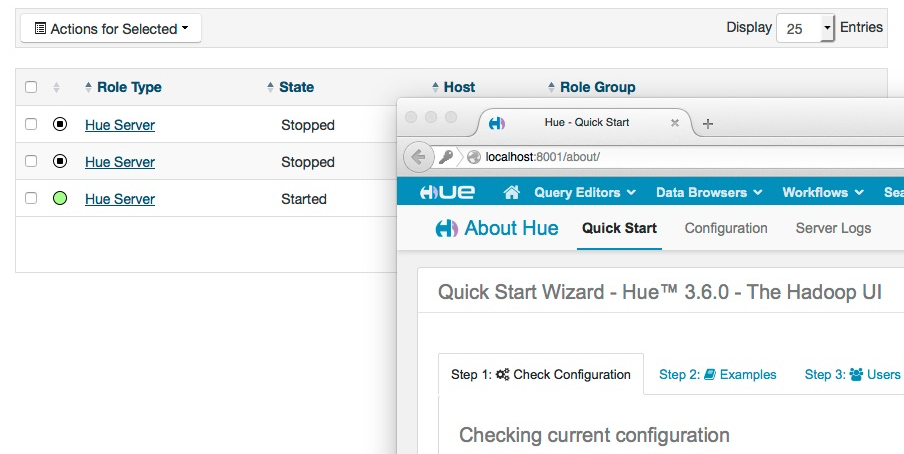

<img class="aligncenter wp-image-2047" src="https://cdn.gethue.com/uploads/2015/01/hue-lb-0-1024x547.png" />

3. Once complete, stop the Hue instance so we can change the underlying database.

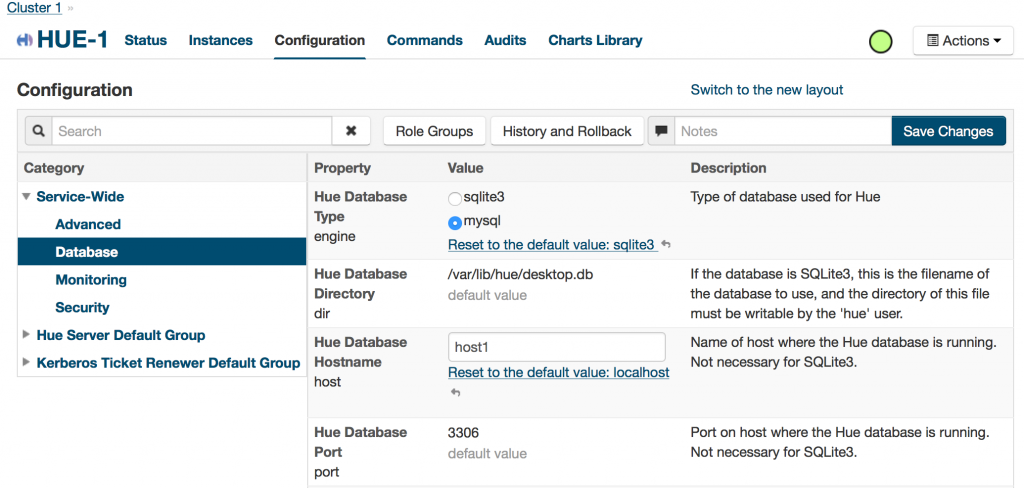

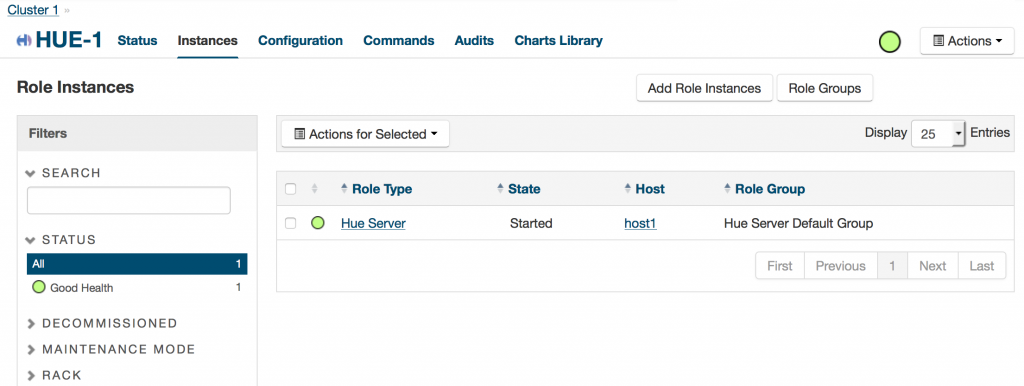

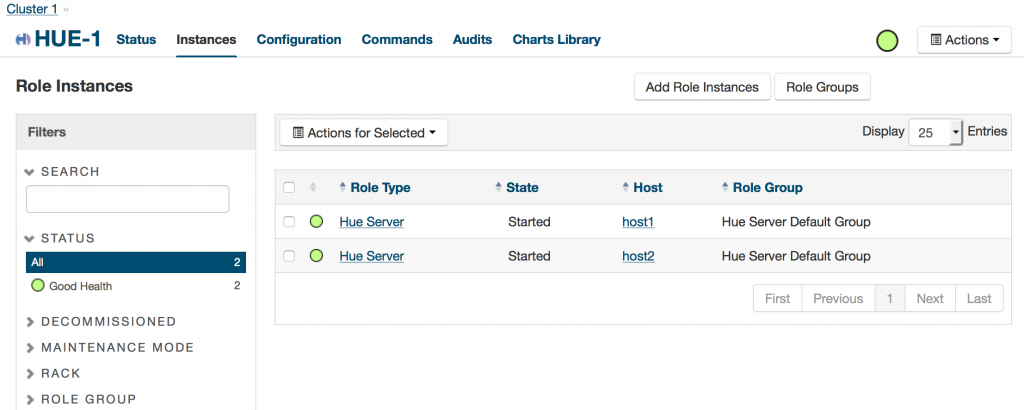

4. Go to “Hue -> Configuration -> Database” and enter in the database connection information, and save. 5. Go to “Hue -> Instances -> Add a Role Instance”

5. Go to “Hue -> Instances -> Add a Role Instance” 6. Select “Hue” and select which services you would like to expose on Hue. If you are using Kerberos, make sure to also add a “Kerberos Ticket Renewer” on the same machine as this new Hue role.

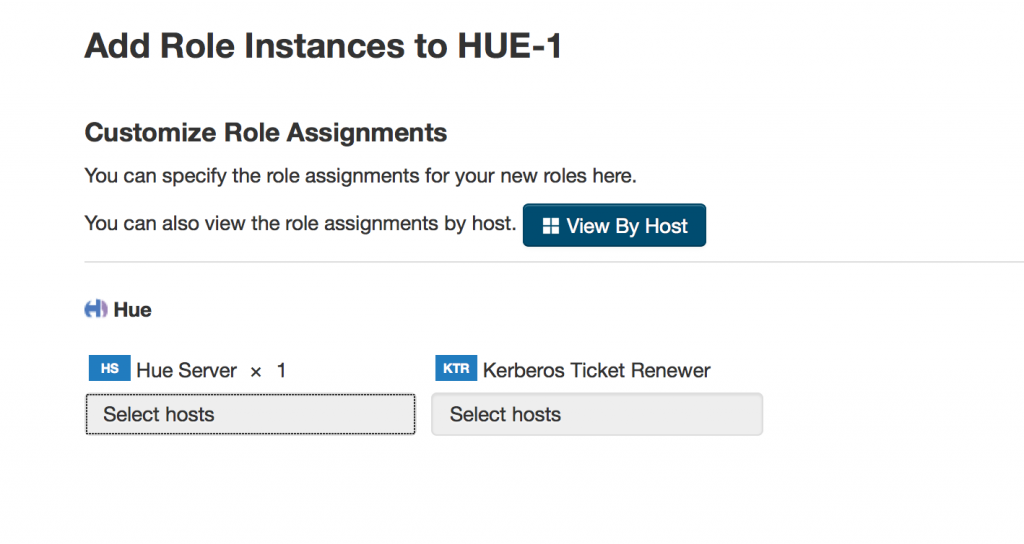

6. Select “Hue” and select which services you would like to expose on Hue. If you are using Kerberos, make sure to also add a “Kerberos Ticket Renewer” on the same machine as this new Hue role. 7. On “Customize Role Assignments”, add at least one other “Hue Server” instance another machine.

8. Start the new Hue Server.

7. On “Customize Role Assignments”, add at least one other “Hue Server” instance another machine.

8. Start the new Hue Server.

Installing the Dependencies

On a Red Hat/Fedora-based system:

% sudo yum install git nginx haproxy python python-pip

% pip install virtualenv

On a Debian/Ubuntu-based system:

% sudo apt-get install git nginx haproxy python python-pip

% pip install virtualenv

Running the load balancers

First, change into the load balancer directory:

% cd $HUE_HOME_DIR/tools/load-balancer

Next, we install the load balancer-specific dependencies in a Python virtual environment to keep those dependencies from affecting other projects on the system.

% virtualenv build

% source build/bin/activate

% pip install -r requirements.txt

Finally, modify etc/hue-lb.toml to point at your instance of Cloudera Manager (as in “cloudera-manager.example.com”, without the port or “http://”), and provide a username and password for an account that has read access to the Hue state.
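Since the configuration expects a bare hostname, it can help to normalize whatever URL you copied from the browser before pasting it in. The following is a minimal sketch, not part of hue-lb-example; the function name `normalize_cm_host` and the sample URL with port 7180 are assumptions made for illustration.

```python
from urllib.parse import urlsplit


def normalize_cm_host(value: str) -> str:
    """Return a bare hostname, dropping any scheme, port, or path.

    Hypothetical helper for illustration; not part of hue-lb-example.
    """
    value = value.strip()
    # urlsplit only recognizes the host when a "//" separator is present,
    # so add one for inputs such as "cm.example.com:7180".
    if "://" not in value:
        value = "//" + value
    host = urlsplit(value).hostname
    if not host:
        raise ValueError("no hostname found in %r" % value)
    return host


# The sample URL below is an assumed example, not a value from the tool.
print(normalize_cm_host("http://cloudera-manager.example.com:7180/"))
```

The printed value is the form described above: the hostname only, with no scheme and no port.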

Now we are ready to start the load balancers. Run:

% ./bin/supervisord

% ./bin/supervisorctl status

haproxy RUNNING pid 36920, uptime 0:00:01

monitor-hue-lb RUNNING pid 36919, uptime 0:00:01

nginx RUNNING pid 36921, uptime 0:00:01

You should be able to access Hue from either http://HUE-LB-HOSTNAME:8000 for NGINX, or http://HUE-LB-HOSTNAME:8001 for HAProxy. To demonstrate that it’s load balancing:

- Go into Cloudera Manager, then “Hue”, then “Instances”.

- Stop the first Hue instance.

- Access the URL and verify it works.

- Start the first instance, and stop the second instance.

- Access the URL and verify it works.
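The “access the URL and verify it works” steps above can also be scripted. This is a rough sketch under stated assumptions: the helper name `check` is hypothetical, and treating any HTTP status below 500 as “working” is a simplification chosen for this example, not a rule from the load balancer itself.

```python
import urllib.error
import urllib.request


def check(url, opener=urllib.request.urlopen, timeout=5):
    """Return True if the URL answers with an HTTP status below 500.

    Hypothetical helper; `opener` is injectable so the function can be
    exercised without a live load balancer.
    """
    try:
        with opener(url, timeout=timeout) as response:
            return response.status < 500
    except urllib.error.HTTPError as err:
        # A 4xx answer still suggests something responded behind the proxy;
        # 5xx responses are treated as failures in this sketch.
        return err.code < 500
    except OSError:
        # Connection refused, DNS failure, timeout, and similar errors.
        return False
```

For example, calling `check("http://HUE-LB-HOSTNAME:8000")` and `check("http://HUE-LB-HOSTNAME:8001")` after stopping each Hue instance in turn should keep returning `True` as long as at least one instance is running.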

Finally, if you want to shut down the load balancers, run:

% ./bin/supervisorctl shutdown

Automatic Updates from Cloudera Manager

The Hue load balancer uses Supervisor, a service that monitors and controls other services. It can be configured to automatically restart services if they crash, or to trigger scripts when certain events occur. The load balancer starts and monitors NGINX or HAProxy through another process named monitor-hue-lb. That process uses the Cloudera Manager API to read the status of Hue in Cloudera Manager, and automatically adds and removes Hue instances from the load balancers. If it detects that a Hue instance has been added or removed, it updates the configuration of all the active load balancers and triggers them to reload without dropping any connections.
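The update cycle described above boils down to comparing the known set of Hue hosts with the set reported by Cloudera Manager, and regenerating configuration only when they differ. The sketch below illustrates that idea; it is not the actual monitor-hue-lb code. The function names, the backend name `hue`, the use of `balance source`, and port 8888 (Hue's default) are all assumptions for illustration, and the Cloudera Manager API call is deliberately left out.

```python
def diff_hosts(current, desired):
    """Return (added, removed) hosts, each as a sorted list."""
    current, desired = set(current), set(desired)
    return sorted(desired - current), sorted(current - desired)


def render_haproxy_backend(hosts, port=8888):
    """Render an HAProxy backend section for the given Hue hosts.

    The section name, balance mode, and port are assumed values.
    """
    lines = ["backend hue", "    balance source"]
    for index, host in enumerate(sorted(hosts)):
        lines.append("    server hue%d %s:%d check" % (index, host, port))
    return "\n".join(lines) + "\n"


def reconcile(current, desired, reload_balancer):
    """Call reload_balancer with fresh config only if the host set changed.

    `reload_balancer` is a hypothetical callback that would write the
    config and ask the balancer to reload.
    """
    added, removed = diff_hosts(current, desired)
    if added or removed:
        reload_balancer(render_haproxy_backend(desired))
        return True
    return False
```

Skipping the reload when nothing changed keeps the balancers untouched between polls, which matches the goal of not disturbing existing connections.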

Sticky Sessions

Both NGINX and HAProxy are configured to route each user to the same backend, otherwise known as sticky sessions. This is done partly for performance, as the user’s data is more likely to be cached in the Hue instance they used before, and partly because Impala does not yet support native high availability (IMPALA-1653). This means that the underlying Impala session opened by one Hue instance cannot be accessed by another Hue instance. With sticky sessions, users are always routed to the same Hue instance, so they can still access their Impala sessions. That is, of course, assuming that Hue instance is still active. If not, the user will be routed to one of the other active Hue instances.
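To make the routing behavior concrete, here is a small sketch of one common way to get stickiness: hash a stable client attribute (here, the client IP) to choose a backend, and fall over to the next active backend if the chosen one is down. This illustrates the behavior only; it is not how NGINX or HAProxy implement it internally, and the name `pick_backend` and the `is_active` callback are hypothetical.

```python
import hashlib


def pick_backend(client_ip, backends, is_active):
    """Pick a stable backend for client_ip, skipping inactive ones.

    Hypothetical illustration of sticky routing with failover.
    """
    if not backends:
        raise ValueError("no backends configured")
    ordered = sorted(backends)
    # A cryptographic digest is used only for a stable, well-spread hash.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    start = int.from_bytes(digest[:8], "big") % len(ordered)
    # Walk the ring from the preferred slot until an active backend is found.
    for offset in range(len(ordered)):
        candidate = ordered[(start + offset) % len(ordered)]
        if is_active(candidate):
            return candidate
    raise RuntimeError("no active backends")
```

The same client always lands on the same Hue instance while that instance is active, and is moved to another instance only when it is not, which is the trade-off described above for Impala sessions.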

Next steps (for C6) will be to make all of the above possible with one click in Cloudera Manager, by shipping a parcel with all the dependencies (or downloading them automatically) and adding a new ‘HA’ role to the Hue service.

Have any questions? Feel free to contact us on hue-user or @gethue!